Dex-Net

The Dexterity Network (Dex-Net) is a research project comprising code, datasets, and algorithms for generating synthetic point clouds, robot parallel-jaw grasps, and physics-based metrics of grasp robustness for thousands of 3D object models. These datasets are used to train machine learning-based methods to plan robot grasps. The broader goal of the Dex-Net project is to develop highly reliable robot grasping across a wide variety of rigid objects such as tools, household items, packaged goods, and industrial parts.

Dex-Net 2.0 is designed to generate training datasets to learn Grasp Quality Convolutional Neural Network (GQ-CNN) models that predict the probability of success of candidate parallel-jaw grasps on objects from point clouds. GQ-CNNs may be useful for quickly planning grasps that can lift and transport a wide variety of objects with a physical robot. Dex-Net 2.1 adds dynamic simulation with pybullet and extends the robust grasping model to the sequential task of bin picking. Dex-Net 3.0 adds support for suction-based end-effectors. Dex-Net 4.0 unifies the reward metric across multiple grippers to efficiently train "ambidextrous" grasping policies that can decide which gripper is best for a particular object. Dex-Net 1.0 was designed for distributed robust grasp analysis in the Cloud across datasets of over 10,000 3D mesh models.

The project was created by Jeff Mahler and Prof. Ken Goldberg and is currently maintained by the Berkeley AUTOLAB. For more info, contact us.

Project Links

Datasets

- GQ-CNN Training Datasets

- Pre-trained GQ-CNN Models

- Object Mesh Dataset v1.1

- HDF5 Database of 3D Objects, Parallel-Jaw Grasps for YuMi, and Robustness Metrics

Learning Ambidextrous Robot Grasping Policies

Jeffrey Mahler, Matthew Matl, Vishal Satish, Michael Danielczuk, Bill DeRose, Stephen McKinley, Ken Goldberg

Science Robotics

[Paper] [Supplement] [Summary] [Raw Data, Experiments, and Analysis Code] [Experiment Object Listing] [Bibtex]

Overview

Universal picking (UP), or reliable robot grasping of a diverse range of novel objects from heaps, is a grand challenge for e-commerce order fulfillment, manufacturing, inspection, and home service robots. Optimizing the rate, reliability, and range of UP is difficult due to inherent uncertainty in sensing, control, and contact physics. This paper explores “ambidextrous” robot grasping, where two or more heterogeneous grippers are used. We present Dexterity Network (Dex-Net) 4.0, a substantial extension to previous versions of Dex-Net that learns policies for a given set of grippers by training on synthetic datasets using domain randomization with analytic models of physics and geometry. We train policies for a parallel-jaw and a vacuum-based suction cup gripper on 5 million synthetic depth images, grasps, and rewards generated from heaps of three-dimensional objects. On a physical robot with two grippers, the Dex-Net 4.0 policy consistently clears bins of up to 25 novel objects with reliability greater than 95% at a rate of more than 300 mean picks per hour.
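Once rewards for different grippers share a common scale, choosing between grippers can be reduced to comparing predicted success probabilities. The sketch below illustrates that idea only: the `Candidate` type, the gripper labels, and the scores are hypothetical and are not taken from the Dex-Net 4.0 code.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Candidate:
    """A candidate grasp with a predicted quality (hypothetical type)."""
    gripper: str    # illustrative label, e.g. "parallel_jaw" or "suction"
    grasp_id: int   # index of the grasp among that gripper's candidates
    quality: float  # predicted probability of success, in [0, 1]


def select_grasp(candidates: List[Candidate]) -> Candidate:
    """Return the candidate with the highest predicted quality across all grippers."""
    if not candidates:
        raise ValueError("no candidate grasps to choose from")
    return max(candidates, key=lambda c: c.quality)


if __name__ == "__main__":
    # Made-up scores standing in for the outputs of per-gripper quality models.
    scored = [
        Candidate("parallel_jaw", 0, 0.62),
        Candidate("parallel_jaw", 1, 0.48),
        Candidate("suction", 0, 0.91),
    ]
    best = select_grasp(scored)
    print(best.gripper, best.grasp_id, best.quality)
```

Because the comparison is a plain maximum, it is only meaningful if the quality values of the different grippers are calibrated against the same reward, which is the role of the unified metric described above.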

Learning Deep Policies for Robot Bin Picking by Simulating Robust Grasping Sequences

Jeffrey Mahler, Ken Goldberg

CoRL 2017

[Paper] [Supplement] [Training Datasets] [Trained GQ-CNNs] [Experiments] [Bibtex]

Overview

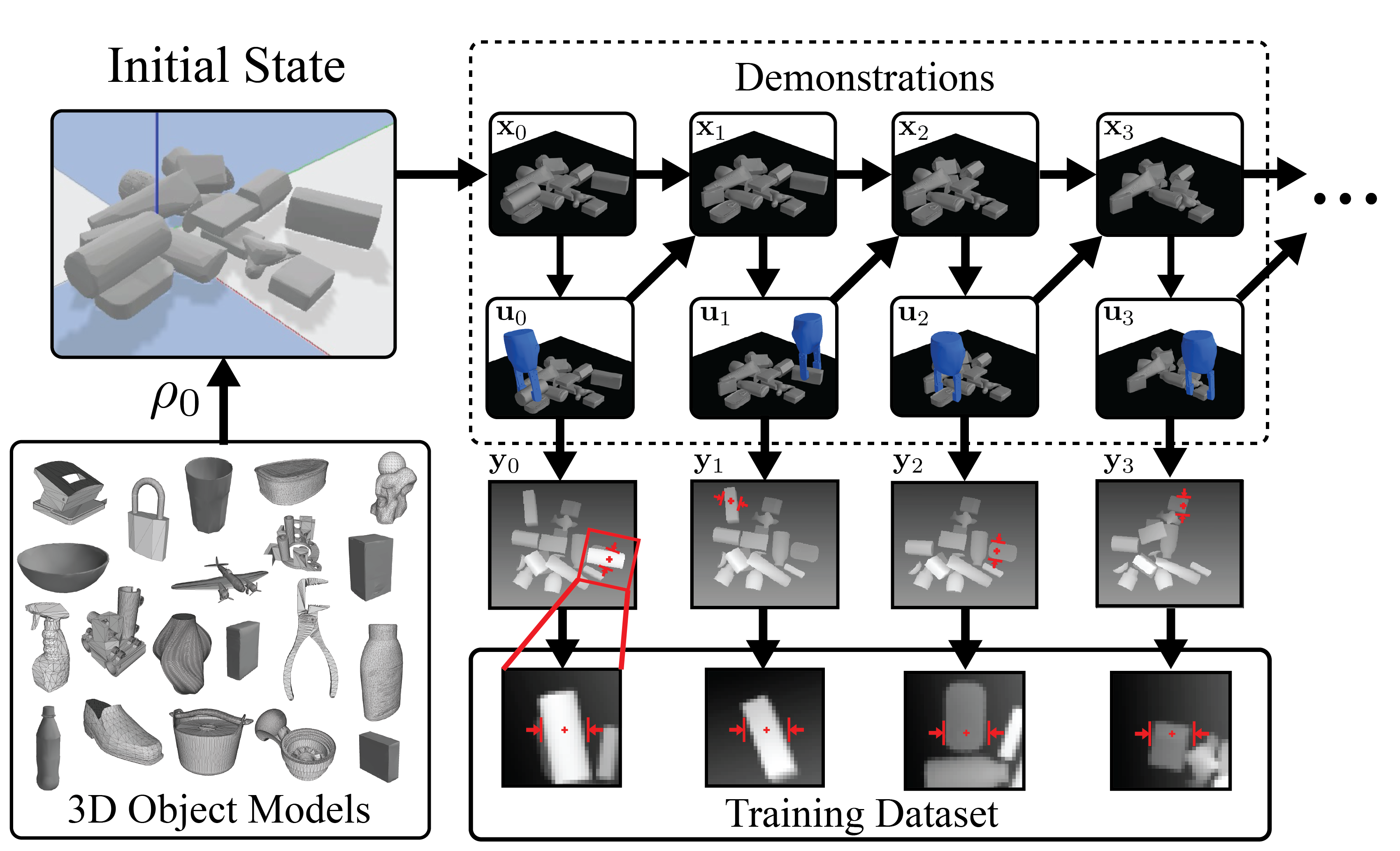

Recent results suggest that it is possible to grasp a variety of singulated objects with high precision using Convolutional Neural Networks (CNNs) trained on synthetic data. This paper considers the task of bin picking, where multiple objects are randomly arranged in a heap and the objective is to sequentially grasp and transport each into a packing box. We model bin picking with a discrete-time Partially Observable Markov Decision Process that specifies states of the heap, point cloud observations, and rewards. We collect synthetic demonstrations of bin picking from an algorithmic supervisor that uses full state information to optimize for the most robust collision-free grasp in a forward simulator. The simulator uses pybullet to model dynamic object-object interactions and robust wrench space analysis from the Dexterity Network (Dex-Net) to model quasi-static contact between the gripper and object. We learn a policy by fine-tuning a Grasp Quality CNN on Dex-Net 2.1 to classify the supervisor's actions from a dataset of 10,000 rollouts of the supervisor in the simulator with noise injection. In 2,192 physical trials of bin picking with an ABB YuMi on a dataset of 50 novel objects, we find that the resulting policies can achieve a 94% success rate and 96% average precision (very few false positives) on heaps of 5-10 objects and can clear heaps of 10 objects in under three minutes.
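The sequential structure of bin picking can be sketched as a loop that keeps attempting grasps until the heap is empty or an attempt budget runs out. This is a toy illustration, not the simulator or policy from the paper: the `clear_heap` function and the `grasp_succeeds` callback are hypothetical stand-ins, and the sketch ignores observations, collisions, and object dynamics.

```python
import random
from typing import Callable, Tuple


def clear_heap(
    num_objects: int,
    grasp_succeeds: Callable[[int], bool],
    max_attempts: int,
) -> Tuple[int, int]:
    """Attempt grasps until the heap is empty or the attempt budget is spent.

    `grasp_succeeds` receives the number of objects still in the heap and
    returns whether the attempted grasp removed one object.
    Returns (objects_removed, attempts_made).
    """
    remaining = num_objects
    attempts = 0
    while remaining > 0 and attempts < max_attempts:
        attempts += 1
        if grasp_succeeds(remaining):
            remaining -= 1  # a successful grasp transports one object out of the bin
    return num_objects - remaining, attempts


if __name__ == "__main__":
    rng = random.Random(0)
    # Assumption: every attempt succeeds independently with probability 0.94.
    removed, attempts = clear_heap(10, lambda remaining: rng.random() < 0.94, 20)
    print(f"removed {removed} objects in {attempts} attempts")
```

In this simplified setting the success rate is `removed / attempts`; a real policy would condition each grasp on the current point cloud rather than on a fixed success probability.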

Dex-Net 3.0: Computing Robust Robot Suction Grasp Targets using a New Analytic Model and Deep Learning

Jeffrey Mahler, Matthew Matl, Xinyu Liu, Albert Li, David Gealy, Ken Goldberg

ICRA 2018

[Paper] [Supplement] [Extended Version (arXiv)] [Dataset] [Pretrained GQ-CNN] [Experiments] [Video: Model] [Video: YuMi] [Bibtex]

Overview

Suction-based end effectors are widely used in industry and are often preferred over parallel-jaw and multifinger grippers due to their ability to lift objects with a single point of contact. This ability simplifies planning, and hand-coded heuristics such as targeting planar surfaces are often used to select suction grasps based on point cloud data. In this paper, we propose a compliant suction contact model that computes the quality of the seal between the suction cup and target object and determines whether or not the suction grasp can resist an external wrench (e.g. gravity) on the object. To evaluate a grasp, we measure robustness to perturbations in end-effector and object pose, material properties, and external wrenches. We use this model to generate Dex-Net 3.0, a dataset of 2.8 million point clouds, suction grasps, and grasp robustness labels computed with 1,500 3D object models and we train a Grasp Quality Convolutional Neural Network (GQ-CNN) on this dataset to classify suction grasp robustness from point clouds. We evaluate the resulting system in 375 physical trials on an ABB YuMi fitted with a pneumatic suction gripper. When the object shape, pose, and mass properties are known, the model achieves 99% precision on a dataset of objects with Adversarial geometry such as sharply curved surfaces. Furthermore, a GQ-CNN-based policy trained on Dex-Net 3.0 achieves 99% and 97% precision respectively on a dataset of Basic and Typical objects.
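Measuring robustness to perturbations can be read as estimating the probability that a grasp still succeeds when its parameters are randomly disturbed. The sketch below shows a Monte Carlo estimate of that probability under strong simplifying assumptions: only one scalar (a lateral offset in millimetres) is perturbed, and the `succeeds` predicate is a hypothetical stand-in for the seal and wrench-resistance checks of the actual model.

```python
import random
from typing import Callable


def estimate_robustness(
    nominal_offset_mm: float,
    succeeds: Callable[[float], bool],
    pose_sigma_mm: float,
    num_samples: int,
    rng: random.Random,
) -> float:
    """Estimate the probability of success under Gaussian perturbation of the offset."""
    if num_samples <= 0:
        raise ValueError("num_samples must be positive")
    successes = 0
    for _ in range(num_samples):
        # Draw a perturbed offset around the nominal grasp location.
        perturbed = rng.gauss(nominal_offset_mm, pose_sigma_mm)
        if succeeds(perturbed):
            successes += 1
    return successes / num_samples


if __name__ == "__main__":
    # Assumption: the grasp works if the cup lands within 5 mm of a patch centre.
    within_patch = lambda offset_mm: abs(offset_mm) <= 5.0
    rng = random.Random(0)
    print(estimate_robustness(0.0, within_patch, 2.0, 1000, rng))
    print(estimate_robustness(4.0, within_patch, 2.0, 1000, rng))
```

A grasp placed near the edge of the tolerance region scores lower than one placed at its centre, even though both succeed without noise, which is the distinction a robustness label is meant to capture.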

Dex-Net 2.0: Deep Learning to Plan Robust Grasps with Synthetic Point Clouds and Analytic Grasp Metrics

Jeffrey Mahler, Jacky Liang, Sherdil Niyaz, Michael Laskey, Richard Doan, Xinyu Liu, Juan Ojea, Ken Goldberg

RSS 2017

[Paper] [Supplement] [Extended Version (arXiv)] [ICRA 2017 LECOM Workshop Abstract] [Code] [Datasets] [Pretrained GQ-CNN] [Video] [Bibtex]

Overview

To reduce data collection time for deep learning of robust robotic grasp plans, we explore training from a synthetic dataset of 6.7 million point clouds, grasps, and robust analytic grasp metrics generated from thousands of 3D models from Dex-Net 1.0 in randomized poses on a table. We use the resulting dataset, Dex-Net 2.0, to train a Grasp Quality Convolutional Neural Network (GQ-CNN) model that rapidly predicts the probability of success of grasps from depth images, where grasps are specified as the planar position, angle, and depth of a gripper relative to an RGB-D sensor. Experiments with over 1,000 trials on an ABB YuMi comparing grasp planning methods on singulated objects suggest that a GQ-CNN trained with only synthetic data from Dex-Net 2.0 can be used to plan grasps in 0.8s with a success rate of 93% on eight known objects with adversarial geometry and is 3x faster than registering point clouds to a precomputed dataset of objects and indexing grasps. The GQ-CNN is also the highest performing method on a dataset of ten novel household objects, with zero false positives out of 29 grasps classified as robust (100% precision) and a 1.5x higher success rate than a registration-based method.
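The precision figure quoted above follows from the standard definition: of the grasps a classifier labels as robust, the fraction that actually succeed. A minimal sketch of that arithmetic, with a hypothetical helper name:

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Fraction of grasps classified as robust that actually succeeded."""
    predicted_positive = true_positives + false_positives
    if predicted_positive == 0:
        raise ValueError("precision is undefined when nothing is classified as robust")
    return true_positives / predicted_positive


if __name__ == "__main__":
    # 29 grasps were classified as robust and none of them failed.
    print(precision(true_positives=29, false_positives=0))  # 1.0
```

Precision says nothing about grasps that were classified as not robust, so it is reported alongside the overall success rate rather than in place of it.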

Code and Data

We are planning on releasing the code and dataset for this project over summer 2017 with the following tentative release dates:

- GQ-CNN Package: June 20, 2017. Dex-Net 2.0 GQ-CNN training dataset with 6.7 million datapoints and ROS integration. The gqcnn package is now available at https://github.com/BerkeleyAutomation/gqcnn with an example ROS service for grasp planning.

- Dex-Net Object Mesh Dataset v1.1: July 12, 2017. The subset of 1,500 3D object models from Dex-Net 1.0 used in the RSS paper, labeled with parallel-jaw grasps for the ABB YuMi. The dataset and dex-net Python API for manipulating the dataset are now available here.

- Dex-Net as a Service: Fall 2017. HTTP web API to create new databases with custom 3D models and compute grasp robustness metrics.

Dex-Net 1.0: A Cloud-Based Network of 3D Objects for Robust Grasp Planning Using a Multi-Armed Bandit Model with Correlated Rewards

Jeff Mahler, Florian Pokorny, Brian Hou, Melrose Roderick, Michael Laskey, Mathieu Aubry, Kai Kohlhoff, Torsten Kroeger, James Kuffner, Ken Goldberg

ICRA 2017 (Finalist, Best Manipulation Paper)

Overview

We present Dexterity Network 1.0 (Dex-Net), a new dataset and associated algorithm to study the scaling effects of Big Data and cloud computation on robust grasp planning. The algorithm uses a Multi-Armed Bandit model with correlated rewards to leverage prior grasps and 3D object models in a growing dataset that currently includes over 10,000 unique 3D object models and 2.5 million parallel-jaw grasps. Each grasp includes an estimate of the probability of force closure under uncertainty in object and gripper pose and friction. Dex-Net 1.0 uses Multi-View Convolutional Neural Networks (MV-CNNs), a new deep learning method for 3D object classification, as a similarity metric between objects and the Google Cloud Platform to simultaneously run up to 1,500 virtual machines, reducing experiment runtime by three orders of magnitude. Experiments suggest that prior data can speed up robust grasp planning by a factor of up to 2 on average and that the quality of planned grasps increases with the number of similar objects in the dataset. We also study system sensitivity to varying similarity metrics and pose and friction uncertainty levels.
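To give a feel for the Multi-Armed Bandit framing, the sketch below treats each candidate grasp as an arm with an unknown success probability and allocates evaluations with Thompson sampling over Beta posteriors. It is a deliberately simplified illustration: the arms are independent, so it omits the correlated rewards and the use of prior data from similar objects that the abstract describes, and Thompson sampling is an assumed choice of selection rule that the text above does not specify. All names and probabilities are hypothetical.

```python
import random
from typing import Callable, List, Tuple


def thompson_sampling(
    pull: Callable[[int], bool],
    num_arms: int,
    num_rounds: int,
    rng: random.Random,
) -> Tuple[List[int], List[int]]:
    """Run Beta-Bernoulli Thompson sampling; return (successes, pulls) per arm."""
    successes = [0] * num_arms
    pulls = [0] * num_arms
    for _ in range(num_rounds):
        # Sample a plausible success probability for each arm from its
        # Beta(1 + successes, 1 + failures) posterior (uniform prior).
        samples = [
            rng.betavariate(1 + successes[i], 1 + pulls[i] - successes[i])
            for i in range(num_arms)
        ]
        # Evaluate the arm whose sampled probability is highest.
        arm = max(range(num_arms), key=samples.__getitem__)
        pulls[arm] += 1
        if pull(arm):
            successes[arm] += 1
    return successes, pulls


if __name__ == "__main__":
    rng = random.Random(0)
    true_p = [0.2, 0.5, 0.9]  # made-up success probabilities of three grasps
    successes, pulls = thompson_sampling(
        lambda arm: rng.random() < true_p[arm], len(true_p), 2000, rng
    )
    print("pulls per grasp:", pulls)
```

Most evaluations end up on the best grasp, so poor candidates are abandoned after a few samples. A correlated model goes further by letting evidence about one grasp update beliefs about similar grasps, which is where prior data can reduce the number of samples needed.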

Code and Data

The code for this project can be found on our GitHub page. This code is deprecated as of May 2017 and will be updated in the Dex-Net 2.0 codebase (see above).

Contributors

This is an ongoing project at UC Berkeley with active contributions from:

Jeff Mahler, Matt Matl, Bill DeRose, Jacky Liang, Alan Li, Vishal Satish, Mike Danielczuk and Ken Goldberg.

Past contributors include:

Florian Pokorny, Brian Hou, Sherdil Niyaz, Xinyu Liu, Melrose Roderick, Mathieu Aubry, Michael Laskey, Richard Doan, Brenton Chu, Raul Puri, Sahanna Suri, Nikhil Sharma, and Josh Price.

Acknowledgements

This research was performed at the AUTOLAB at UC Berkeley in affiliation with the Berkeley AI Research (BAIR) Lab, the Real-Time Intelligent Secure Execution (RISE) Lab, and the CITRIS People and Robots (CPAR) Initiative. The authors were supported in part by the U.S. National Science Foundation under NRI Award IIS-1227536: Multilateral Manipulation by Human-Robot Collaborative Systems, the Department of Defense (DoD) through the National Defense Science & Engineering Graduate Fellowship (NDSEG) Program, and the Berkeley Deep Drive (BDD) Program. This work was also supported in part by donations from Siemens, Google, Amazon Robotics, Toyota Research Institute, Autodesk, ABB, Samsung, Knapp, Loccioni, Honda, Intel, Comcast, Cisco, and Hewlett-Packard, and by equipment grants from Photoneo, Nvidia, and Intuitive Surgical. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the Sponsors.

Support or Contact

Please contact Jeff Mahler (email) or Prof. Ken Goldberg (email) of the AUTOLAB at UC Berkeley.